Mission

The ASP Lab develops the mathematical foundations of information processing by uncovering universal algebraic structures that transcend specific domains. We build rigorous theory that explains why modern learning architectures work and design principled new methods with provable guarantees for stability, transferability, and performance.

Our research philosophy: Structure reveals insight. Abstraction enables transfer. Rigor ensures reliability.

Research Themes

1. Graphon Signal Processing & Large-Scale Networks

We develop signal processing frameworks for graphons—the continuum limits of large graphs. This enables analysis and design of algorithms that work consistently as networks scale, with applications to power systems, social networks, and infrastructure optimization. Our graphon pooling and sampling methods provide transferable learning guarantees for graph neural networks operating across different network sizes.

Key contributions: Graphon pooling operators, sampling and uniqueness theorems, stability analysis for aggregation GNNs
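
As a rough illustration of the scaling idea above, the following Python sketch samples weighted graphs of two sizes from one graphon and compares the largest eigenvalue of the size-normalized adjacency matrix. The graphon W(x, y) = min(x, y), the regular sampling grid, and all function names are assumptions chosen for illustration; they are not taken from the lab's publications.

```python
import numpy as np

def graphon(x, y):
    # Hypothetical graphon chosen for illustration: W(x, y) = min(x, y) on [0, 1]^2.
    return np.minimum(x, y)

def sampled_shift_operator(n):
    # Evaluate the graphon on a regular grid of n points to get a weighted
    # adjacency matrix, then scale by 1/n so spectra are comparable across sizes.
    u = (np.arange(n) + 0.5) / n
    A = graphon(u[:, None], u[None, :])
    return A / n

def top_eigenvalue(n):
    # eigvalsh returns the eigenvalues of a symmetric matrix in ascending order.
    return np.linalg.eigvalsh(sampled_shift_operator(n))[-1]

lam_small = top_eigenvalue(50)
lam_large = top_eigenvalue(400)
print(f"n=50:  {lam_small:.4f}")
print(f"n=400: {lam_large:.4f}")
```

The two printed values should be close to each other. This is the kind of size-independent behavior that lets a filter designed on one graph be reused on a larger graph drawn from the same graphon.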

2. Algebraic Foundations of Deep Learning

We use representation theory and C*-algebras to understand convolutional neural networks as algebraic objects. This perspective reveals why certain architectures generalize well, provides stability guarantees under input deformations, and enables principled design of new architectures for non-Euclidean domains including manifolds, point clouds, and Lie groups.

Key contributions: Algebraic neural network stability theory, RKHS convolutional filters, Lie group algebra filters

3. Signal Processing on Non-Commutative Structures

Traditional signal processing assumes commutativity—that operations can be reordered without consequence. We extend these frameworks to non-commutative algebras, enabling signal processing on multigraphs, directed networks, and other structures where order matters. This opens new possibilities for analyzing complex relational data.

Key contributions: Non-commutative convolutional filtering, multigraph signal processing, quiver signal processing
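
To make "order matters" concrete, here is a minimal Python sketch of a polynomial filter on a toy multigraph with two edge types. The matrices `S1` and `S2`, the helper `multigraph_filter`, and the word-based coefficient format are hypothetical and meant only as an illustration, not as the lab's implementation.

```python
import numpy as np

# Two edge types on the same 3 nodes (a toy multigraph); both matrices are hypothetical.
S1 = np.array([[0, 1, 0],
               [1, 0, 0],
               [0, 0, 0]], dtype=float)  # edge type 1 links nodes 0 and 1
S2 = np.array([[0, 0, 0],
               [0, 0, 1],
               [0, 1, 0]], dtype=float)  # edge type 2 links nodes 1 and 2

def multigraph_filter(x, coeffs):
    """Apply sum over words of coeffs[word] * (product of shifts in word) to x.

    A word such as "12" stands for the operator S1 @ S2; the empty word "" is the identity.
    """
    shifts = {"1": S1, "2": S2}
    y = np.zeros_like(x)
    for word, h in coeffs.items():
        z = x.copy()
        # Apply shifts right to left so that "12" acts as S1 @ (S2 @ x).
        for symbol in reversed(word):
            z = shifts[symbol] @ z
        y += h * z
    return y

x = np.array([1.0, 0.0, 0.0])  # unit signal on node 0
y12 = multigraph_filter(x, {"12": 1.0})
y21 = multigraph_filter(x, {"21": 1.0})
commute = np.allclose(S1 @ S2, S2 @ S1)

print("S1 and S2 commute:", commute)
print("S1 S2 x =", y12)
print("S2 S1 x =", y21)
```

Because the two shift operators do not commute, the monomials `S1 S2` and `S2 S1` need separate filter coefficients, which is what separates this setting from standard graph filters built on a single shift operator.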

4. Optimal Sampling & Reconstruction Theory

We develop sampling strategies with provable optimality guarantees for signals on graphs and other irregular domains. Our blue-noise sampling methods adapt classical signal processing principles to network settings, enabling efficient data collection and reconstruction in applications from sensor networks to medical imaging.

Key contributions: Blue-noise graph sampling, optimal sampling sets in structured graphs, spectral zooming

Lab Culture & Philosophy

We value:

- Mathematical rigor grounded in real applications

- Collaborative exploration of hard problems

- Clear communication of complex ideas across audiences

- Interdisciplinary thinking that bridges pure mathematics and engineering

- Mentorship and professional development

Lab members develop deep technical expertise while learning to communicate their work effectively to diverse audiences.

Opportunities for Prospective Students

We are recruiting!

The ASP Lab welcomes motivated graduate students interested in the mathematical foundations of machine learning, signal processing, and network science. Ideal candidates have:

A strong background in:

- Linear algebra and mathematical analysis

- Probability and optimization

- Signal processing or machine learning fundamentals

- Programming (Python, MATLAB, or similar)

An interest in developing:

- Deep theoretical understanding of learning systems

- Rigorous mathematical frameworks

- Applications to real-world problems in power systems, networks, or data science

How to Apply

Prospective PhD students should apply through the CU Denver Electrical Engineering graduate program. In your application materials, mention your interest in the ASP Lab and highlight relevant mathematical/technical background.

Prospective MS students or undergraduate researchers interested in getting involved should email Dr. Parada-Mayorga directly with:

- Brief description of your background and interests

- CV/resume

- Unofficial transcript

We particularly encourage applications from students interested in pursuing PhD studies or those seeking research experience before graduate school.

Current Lab Members

Faculty

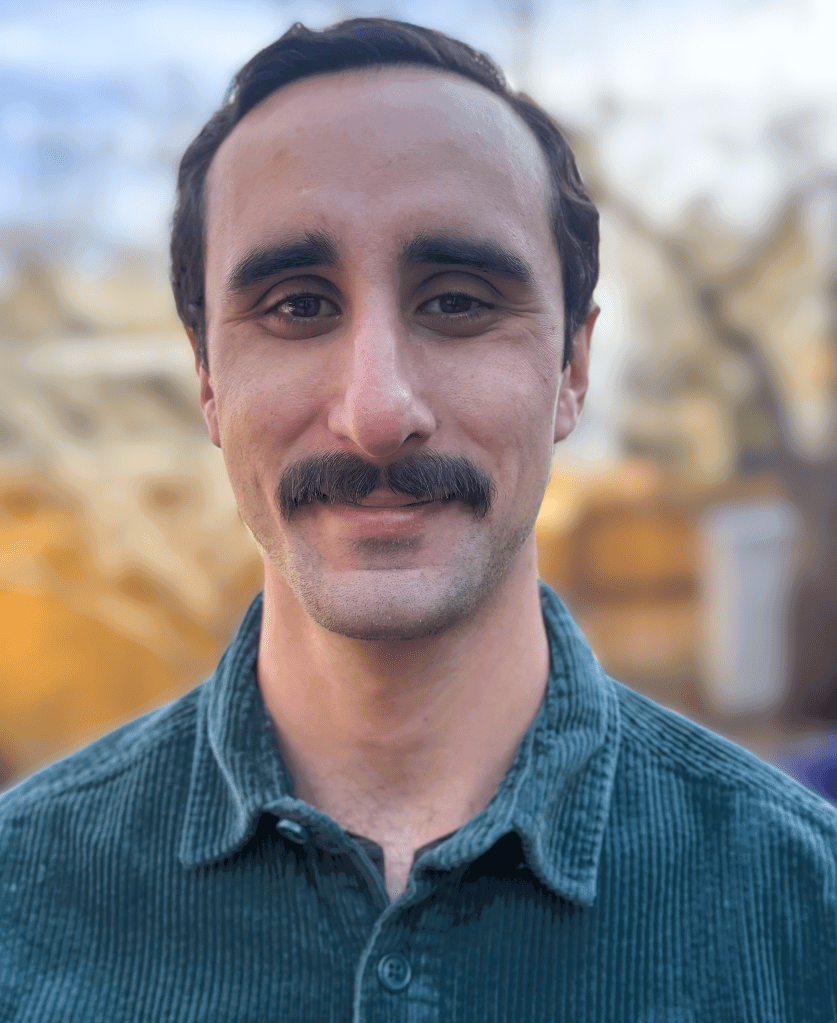

- Alejandro Parada-Mayorga, Ph.D.

Students

Tharon Stewart. Master's student [August 2024 – Present]. Electrical Engineering at CU Denver. Research topic: Graph Signal Processing.

Gabriel (Gabe) Kelly-Ramirez. Undergraduate student [Spring 2026 – Present]. Electrical Engineering at CU Denver. Research topic: Physics-inspired Signal Processing in Machine Learning.

Alexander (Alex) Shotkoski. Undergraduate student [Spring 2026 – Present]. Electrical Engineering at CU Denver. Research topic: Physics-inspired Signal Processing in Machine Learning.

Sarah Hassanein. Undergraduate student [Spring 2026 – Present]. Electrical Engineering at CU Denver. Research topic: Graph Signal Processing.